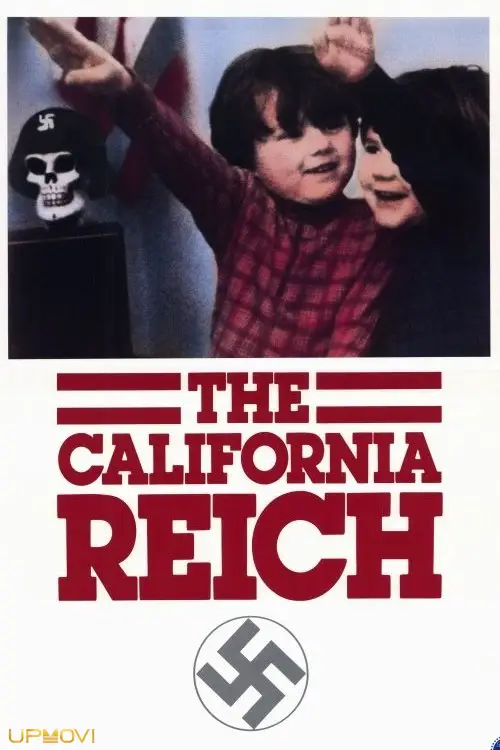

The California Reich

A documentary on the roots of Nazism in America.

No trailer available.

| Plan | Regular price | Discount | Discounted price | Duration |
|---|---|---|---|---|
| 1-Month Subscription | 71 thousand Toman | 20% | 59 thousand Toman | 31 days |
| 3-Month Subscription | 215 thousand Toman | 20% | 179 thousand Toman | 90 days |
| 6-Month Subscription (popular choice) | 407 thousand Toman | 20% | 339 thousand Toman | 180 days |
| 1-Year Subscription | 719 thousand Toman | 20% | 599 thousand Toman | 365 days |